ROBOT GRASP SYNTHESIS AS A PATTERN RECOGNITION PROBLEM

Abstract

The goal of this research is to enable robots to grasp objects autonomously by imitating human behaviour. First, the user grasps a set of objects through a robot teleoperation device, producing a set of training examples for the system. These data are used to build a model of the user's grasping behaviour. The model is based on point attributes, which describe each contact point, and set attributes, which describe each set of two contact points (a two-jaw parallel gripper is assumed). Once the model has been built, the robot can grasp new objects autonomously by choosing, among all possible grasps, the one most similar to the training examples.
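The page does not state which point and set attributes are used or how similarity is measured, so the following Python sketch is only one possible reading under explicit assumptions: objects are 2D contours given as ordered point lists, the point attributes (absolute turning angle as a crude curvature, distance from the centroid) and set attributes (jaw opening, offset of the grasp midpoint from the centroid) are hypothetical choices, and "most similar" is taken to mean the smallest Euclidean distance to any training example (nearest neighbour). All function names and the `max_opening` gripper limit are illustrative, not taken from the original Matlab code.

```python
import math
from itertools import combinations


def centroid(contour):
    """Mean of the contour points (a simple stand-in for the object's centre)."""
    n = len(contour)
    return (sum(x for x, _ in contour) / n, sum(y for _, y in contour) / n)


def point_attributes(contour, i):
    """Hypothetical point attributes of contact i: |turning angle| and distance from the centroid."""
    (x0, y0), (x1, y1) = contour[i - 1], contour[i]
    x2, y2 = contour[(i + 1) % len(contour)]
    a_in = math.atan2(y1 - y0, x1 - x0)
    a_out = math.atan2(y2 - y1, x2 - x1)
    turn = (a_out - a_in + math.pi) % (2 * math.pi) - math.pi  # wrap to [-pi, pi)
    cx, cy = centroid(contour)
    return [abs(turn), math.hypot(x1 - cx, y1 - cy)]


def set_attributes(contour, i, j):
    """Hypothetical set attributes of the contact pair: jaw opening and midpoint offset."""
    (xi, yi), (xj, yj) = contour[i], contour[j]
    cx, cy = centroid(contour)
    opening = math.hypot(xj - xi, yj - yi)
    offset = math.hypot((xi + xj) / 2 - cx, (yi + yj) / 2 - cy)
    return [opening, offset]


def grasp_features(contour, i, j):
    """Feature vector of a two-contact grasp; sorting makes it independent of contact order."""
    a, b = sorted([point_attributes(contour, i), point_attributes(contour, j)])
    return a + b + set_attributes(contour, i, j)


def choose_grasp(contour, training, max_opening):
    """Return (pair, score) for the contact pair nearest to any training feature vector,
    skipping pairs wider than the (assumed) maximum jaw opening."""
    best, best_score = None, math.inf
    for i, j in combinations(range(len(contour)), 2):
        if set_attributes(contour, i, j)[0] > max_opening:
            continue
        f = grasp_features(contour, i, j)
        score = min(math.dist(f, t) for t in training)
        if score < best_score:
            best, best_score = (i, j), score
    return best, best_score


# A 4 x 2 rectangle sampled along its boundary.
rect = [(0, 0), (1, 0), (2, 0), (3, 0), (4, 0), (4, 1),
        (4, 2), (3, 2), (2, 2), (1, 2), (0, 2), (0, 1)]
training = [grasp_features(rect, 2, 8)]  # one demonstrated grasp across the long sides
print(choose_grasp(rect, training, max_opening=5.0))  # ((2, 8), 0.0)
```

A single demonstrated grasp across the long sides is enough here for the system to select the same contact pair again. In a real setup the training set would hold many demonstrations on different objects, and the candidate grasps of a new object would be scored against all of them.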

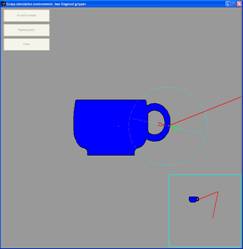

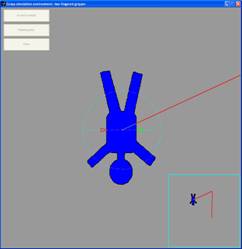

Simulation environment

A simulation environment has been developed in Matlab to evaluate the performance of the system. Using it is straightforward: provide some grasp training examples so that the system can build a model, then ask the system to grasp other objects autonomously. You can try different grasping behaviours and check whether the system is able to imitate them.
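One way to check whether the system imitates a given behaviour is a leave-one-object-out test: for each object, build the model from the grasps demonstrated on all the other objects and see whether the grasp chosen for the held-out object matches the user's. The Python sketch below assumes each object has already been reduced to a list of candidate-grasp feature vectors plus the index of the grasp the user demonstrated, and it uses nearest-neighbour matching as the similarity rule. The protocol, the toy feature values and the names `pick` and `imitation_rate` are hypothetical and are not taken from the Matlab environment.

```python
import math


def pick(candidates, examples):
    """Index of the candidate closest (Euclidean) to any stored example."""
    return min(range(len(candidates)),
               key=lambda k: min(math.dist(candidates[k], e) for e in examples))


def imitation_rate(objects):
    """Fraction of objects on which the system reproduces the user's grasp.

    objects: list of (candidates, user_choice), where candidates is a list of
    feature vectors (one per possible grasp) and user_choice is the index of
    the grasp the user demonstrated on that object.
    """
    hits = 0
    for k, (candidates, user_choice) in enumerate(objects):
        # The model for object k uses only the demonstrations on the other objects.
        examples = [c[u] for m, (c, u) in enumerate(objects) if m != k]
        hits += pick(candidates, examples) == user_choice
    return hits / len(objects)


# Toy features [jaw opening, midpoint offset]; this "user" prefers centred grasps.
objects = [
    ([[2.0, 0.0], [4.0, 1.0], [3.0, 0.8]], 0),
    ([[3.0, 1.2], [2.5, 0.1], [1.0, 0.9]], 1),
    ([[1.8, 0.9], [2.2, 1.5], [2.1, 0.05]], 2),
]
print(imitation_rate(objects))  # 1.0
```

Because every demonstration in the toy data favours a grasp with a small midpoint offset, the held-out choices are always reproduced. An inconsistent demonstrator would lower the rate, which is the kind of difference the simulation environment lets you observe.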

The full Matlab code and the documentation can be downloaded from the links below:

Experimental setup

Experiments have been carried out with a Mitsubishi SCARA robot equipped with a two-jaw parallel gripper and a video camera (used to detect the contour of the objects to be grasped). The videos below show the behaviour of the system:

Weka datasets

The Weka environment has been used to test different machine learning algorithms in order to select those most appropriate for robot grasp learning. All tests were performed on a database of 200 grasp examples. The datasets and their descriptions are available for further tests and can be downloaded from the link below:

- Robot grasp datasets (Weka .arff format).
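The attributes actually stored in these datasets are described in the downloadable files and are not reproduced here. As a hedged illustration of the ARFF layout that Weka reads, the following Python sketch writes grasp examples with hypothetical numeric attributes (`opening`, `offset`) and a hypothetical nominal `class` label. It assumes relation and attribute names contain no spaces or special characters, which would otherwise need quoting; the function name `to_arff` is illustrative.

```python
def to_arff(relation, attributes, class_values, rows):
    """Serialise grasp examples as a Weka ARFF string.

    attributes: names of numeric attributes (hypothetical, e.g. jaw opening);
    class_values: allowed nominal labels for the last column;
    rows: (values, label) pairs, one per grasp example.
    """
    lines = [f"@RELATION {relation}", ""]
    lines += [f"@ATTRIBUTE {name} NUMERIC" for name in attributes]
    lines.append("@ATTRIBUTE class {" + ",".join(class_values) + "}")
    lines += ["", "@DATA"]
    for values, label in rows:
        if len(values) != len(attributes):
            raise ValueError("row length does not match attribute list")
        if label not in class_values:
            raise ValueError(f"unknown class label: {label}")
        # One comma-separated data line per example, class label last.
        lines.append(",".join(repr(float(v)) for v in values) + f",{label}")
    return "\n".join(lines) + "\n"


print(to_arff("grasps", ["opening", "offset"], ["good", "bad"],
              [([2.0, 0.0], "good"), ([4.0, 1.0], "bad")]))
```

The output has one `@ATTRIBUTE` line per feature, followed by `@DATA` and one line per grasp. Saved with a `.arff` extension it can be opened in Weka, and from Python `scipy.io.arff.loadarff` can read it back.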